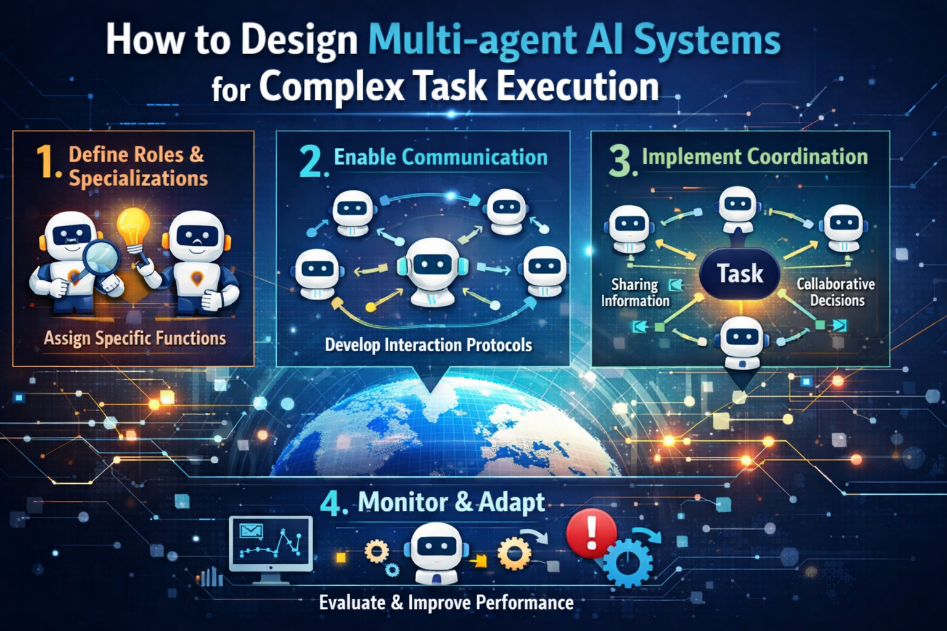

As artificial intelligence systems grow more capable, many real problems can no longer be handled by a single model. Complex tasks often involve planning and continuous adjustment. This is where multi-agent AI systems become useful. Instead of relying on one large model, work is divided across multiple agents that collaborate toward a shared goal.

Learners who begin with an Agentic AI Course are often introduced to this idea early. They learn that intelligence in real systems is not just about prediction, but about how different components work together.

What Are Multi-Agent AI Systems?

A multi-agent AI system consists of multiple independent agents that interact with each other. Each agent has a specific role and responsibility: some monitor inputs, while others analyze them.

Rather than one system doing everything, tasks are divided into smaller chunks, which makes the overall system easier to manage.

For example, one agent might monitor user input, a second might analyze it, and a third might decide the follow-up action. Together they produce results that a single model would struggle to deliver on its own.
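The example above can be sketched as a small pipeline. This is only an illustration: the agent names, the `Message` fields, and the keyword rule are assumptions made for the sketch, and the analysis step between monitoring and deciding is included as a plausible middle agent rather than something a real system must have.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """Shared record passed from one agent to the next (hypothetical shape)."""
    text: str
    intent: str = "unknown"
    action: str = "none"


def monitor_agent(raw_input: str) -> Message:
    """Capture the user input and normalize it."""
    return Message(text=raw_input.strip().lower())


def analysis_agent(msg: Message) -> Message:
    """Attach a coarse intent label using a toy keyword rule."""
    msg.intent = "refund" if "refund" in msg.text else "general"
    return msg


def decision_agent(msg: Message) -> Message:
    """Choose the follow-up action based on the detected intent."""
    msg.action = "open_refund_ticket" if msg.intent == "refund" else "send_faq_link"
    return msg


def run_pipeline(raw_input: str) -> Message:
    """Chain the three agents: monitor, then analyze, then decide."""
    return decision_agent(analysis_agent(monitor_agent(raw_input)))
```

Calling `run_pipeline("  I want a REFUND ")` walks the input through all three agents. Each function can be swapped out without touching the other two.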

Why Are Multi-Agent Systems Needed for Complex Tasks?

Real-world problems are rarely simple. They often involve uncertainty and multiple objectives. A single AI model usually focuses on one type of task at a time, whereas multi-agent systems allow different agents to specialize and coordinate.

Common reasons organizations adopt multi-agent systems include:

- Handling long workflows with many steps.

- Managing competing goals or constraints.

- Adapting to changing inputs in real time.

- Scaling decision-making across systems.

During Generative AI Online Training, learners often explore how agents communicate and adjust their behavior when conditions change. This helps them understand why agent-based design is becoming more common.

Core Components of a Multi-Agent System

Most multi-agent systems include a few basic components.

- Agents themselves are the core units. Each agent has its own logic and decision rules. Some agents are reactive, responding to events, while others plan ahead.

- Communication mechanisms allow agents to share information. This could be through messages or signals.

- Coordination logic ensures agents do not work against each other. Rules define how conflicts are resolved and which decisions take priority.

- A control layer may exist to monitor the system and ensure it stays aligned with overall goals.

Understanding these parts helps designers avoid chaotic behavior in which agents act on their own without alignment.
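To make these components concrete, here is a minimal sketch of a message bus connecting a reactive agent and a planning agent. All of it is illustrative: `MessageBus`, the topic names, and the temperature threshold are hypothetical. The bus's `log` list stands in for a very simple control layer that records every message.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Handler = Callable[[dict], None]


class MessageBus:
    """Communication mechanism: agents publish and subscribe by topic."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        # Stand-in for a control layer: a record of every message sent.
        self.log: List[Tuple[str, dict]] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        self.log.append((topic, payload))
        for handler in list(self._subscribers[topic]):
            handler(payload)


class ReactiveAgent:
    """Responds immediately to each sensor event."""

    def __init__(self, bus: MessageBus) -> None:
        self.bus = bus
        bus.subscribe("sensor", self.on_reading)

    def on_reading(self, payload: dict) -> None:
        if payload["temperature"] > 30:  # assumed threshold
            self.bus.publish("alert", {"reason": "too_hot"})


class PlanningAgent:
    """Collects alerts and turns them into a plan when asked."""

    def __init__(self, bus: MessageBus) -> None:
        self.pending: List[dict] = []
        bus.subscribe("alert", self.pending.append)

    def plan(self) -> List[str]:
        steps = [f"handle:{alert['reason']}" for alert in self.pending]
        self.pending.clear()
        return steps
```

The two agents never call each other directly. They only share topics on the bus, which is what keeps them independent.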

Designing Roles and Responsibilities

One of the most important steps in building a multi-agent system is defining clear roles. When agents have overlapping responsibilities, systems become unpredictable.

Good design starts by asking:

- What tasks can be separated?

- Which agents need to cooperate?

- Where should decisions be centralized or distributed?

Learners in an Artificial Intelligence Online Course in India often practice breaking down business and technical problems into agent roles. This teaches them to think in systems rather than isolated models.

Clear roles also make systems easier to debug and improve over time.
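One lightweight way to check for overlapping responsibilities is to write the roles down as data and look for tasks claimed by more than one agent. The sketch below assumes roles can be expressed as a mapping from agent name to a set of task names. The function name and the example tasks are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Set


def find_overlaps(roles: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Return every task that is claimed by more than one agent."""
    owners: Dict[str, List[str]] = defaultdict(list)
    for agent, tasks in roles.items():
        for task in tasks:
            owners[task].append(agent)
    # Keep only tasks with two or more owners; sort names for stable output.
    return {
        task: sorted(agents)
        for task, agents in owners.items()
        if len(agents) > 1
    }


roles = {
    "intake": {"parse_request"},
    "billing": {"issue_refund", "send_receipt"},
    "support": {"issue_refund"},
}
overlaps = find_overlaps(roles)
```

Here `overlaps` flags `issue_refund` as shared by `billing` and `support`, which is the kind of ambiguity worth resolving before the agents are built.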

Coordination and Conflict Handling

When multiple agents act together, conflicts are unavoidable. Two agents may suggest different actions based on their own goals.

Effective systems include coordination strategies such as:

- Priority rules.

- Voting mechanisms.

- Supervisor agents.

- Shared constraints.

These strategies help the system settle on consistent actions instead of switching back and forth between decisions. Without coordination, multi-agent systems can become unstable, even in cases where each agent performs well on its own.
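Three of the strategies listed above (priority rules, voting, and a supervisor) can be sketched in a few lines. This is a simplified illustration, not a standard algorithm: the `Proposal` type, the alphabetical tie-break, and the `veto_priority` threshold are all assumptions, and empty proposal lists are not handled.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Proposal:
    agent: str
    action: str
    priority: int  # higher value wins under the priority rule


def resolve_by_priority(proposals: List[Proposal]) -> str:
    """Priority rule: the highest-priority proposal wins.

    On equal priority, the earliest proposal in the list is chosen.
    """
    return max(proposals, key=lambda p: p.priority).action


def resolve_by_vote(proposals: List[Proposal]) -> str:
    """Voting: the most frequently proposed action wins.

    Ties are broken alphabetically so the result is deterministic.
    """
    counts = Counter(p.action for p in proposals)
    top = max(counts.values())
    return sorted(action for action, n in counts.items() if n == top)[0]


def supervisor(proposals: List[Proposal], veto_priority: int = 9) -> str:
    """Supervisor: urgent proposals override the vote, otherwise vote."""
    urgent = [p for p in proposals if p.priority >= veto_priority]
    if urgent:
        return resolve_by_priority(urgent)
    return resolve_by_vote(proposals)


proposals = [
    Proposal("safety", "stop", 10),
    Proposal("speed", "accelerate", 3),
    Proposal("comfort", "accelerate", 2),
]
```

With these proposals, voting alone picks `accelerate`, while the priority rule and the supervisor both pick `stop`. The choice of strategy changes the outcome, which is why it should be an explicit design decision.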

Scalability and Adaptability

One advantage of multi-agent systems is scalability. New agents can be added as tasks grow, without redesigning the whole system.

Agents can also be updated independently. If one part of the system needs improvement, it can be modified without touching the rest.

This modular structure makes multi-agent systems suitable for long-term use in dynamic environments.
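A registry is one simple way to get this kind of modularity. In the sketch below, agents are plain functions registered under a task type. The `AgentRegistry` class and the task names are hypothetical, chosen only to show that adding or replacing an agent does not change the dispatch code.

```python
from typing import Callable, Dict

Agent = Callable[[str], str]


class AgentRegistry:
    """Maps task types to agents so agents can be added or swapped freely."""

    def __init__(self) -> None:
        self._agents: Dict[str, Agent] = {}

    def register(self, task_type: str, agent: Agent) -> None:
        # Registering an existing task type replaces the old agent.
        self._agents[task_type] = agent

    def dispatch(self, task_type: str, payload: str) -> str:
        agent = self._agents.get(task_type)
        if agent is None:
            raise LookupError(f"no agent registered for {task_type!r}")
        return agent(payload)


registry = AgentRegistry()
registry.register("shout", lambda text: text.upper())

# Later, a new capability is added without touching dispatch().
registry.register("reverse", lambda text: text[::-1])

# An existing agent is improved in isolation.
registry.register("shout", lambda text: text.upper() + "!")
```

After the last call, `registry.dispatch("shout", "hi")` uses the improved agent, while the `reverse` agent is unaffected.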

Real-World Applications

Multi-agent AI systems are used in many areas today:

- Autonomous operations and robotics.

- Recommendation and personalization systems.

- Supply chain optimization.

- Financial monitoring and risk management.

- Intelligent assistants handling complex workflows.

In each case, no single model controls everything. Rather, coordination between agents enables smarter outcomes.

Challenges in Multi-Agent Design

Multi-agent systems are harder to design and test than single-model systems, and the challenges go beyond individual agents. As the number of agents increases, interactions multiply, making system behavior less predictable. Small logic gaps can lead to large downstream effects that are difficult to diagnose.

Common challenges in multi-agent design include:

- Unexpected agent behavior when goals conflict.

- Communication delays or message loss between agents.

- Difficulty tracing decisions across multiple agents.

- Increased system complexity as coordination grows.

This is why training and structured design matter. Learners must understand how agents interact and recover from failures so the system behaves reliably as a whole.
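Two of the challenges above, message loss and hard-to-trace decisions, have simple first-line mitigations: retry delivery a bounded number of times, and carry a decision trail along with each message. The sketch below assumes messages are plain dictionaries and that a `deliver` callable returns `True` on success. Both helper names are hypothetical.

```python
from typing import Callable, List, Tuple


def record_step(message: dict, agent: str, decision: str) -> dict:
    """Return a copy of the message with one more entry in its decision trail."""
    trail: List[Tuple[str, str]] = message.get("trail", []) + [(agent, decision)]
    return {**message, "trail": trail}


def send_with_retry(
    deliver: Callable[[dict], bool],
    message: dict,
    max_attempts: int = 3,
) -> int:
    """Try to deliver a message, retrying on failure.

    Returns the number of attempts used, or raises if every attempt fails.
    """
    for attempt in range(1, max_attempts + 1):
        if deliver(message):
            return attempt
    raise RuntimeError(f"delivery failed after {max_attempts} attempts")


# Build up a trail as the message passes through two agents.
msg = {"id": 1, "text": "order delayed"}
msg = record_step(msg, "monitor", "flagged")
msg = record_step(msg, "planner", "notify_customer")
```

When something goes wrong downstream, `msg["trail"]` shows which agent made which decision and in what order. A production system would likely add timestamps and backoff between retries, which are left out here to keep the sketch short.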

Conclusion

Designing multi-agent AI systems is about coordination and long-term reliability, achieved by dividing responsibilities across agents and guiding how they work together.

With proper training through the courses mentioned above, practitioners can build multi-agent systems that move AI beyond isolated predictions and into real decision-making environments. As AI systems continue to evolve, this approach will play an increasingly important role in how intelligent solutions are built and deployed.